Some styles can’t be described in words, and the model may never have been trained on them. We need a way to show the model an image and say “do something like this”.

We can use T2I-Adapter on top of Stable Diffusion to allow for a style image as an additional input.

Starting from the technique described in *Style Transfer From Text In Stable Diffusion*, we add just one more step: supplying a style image.

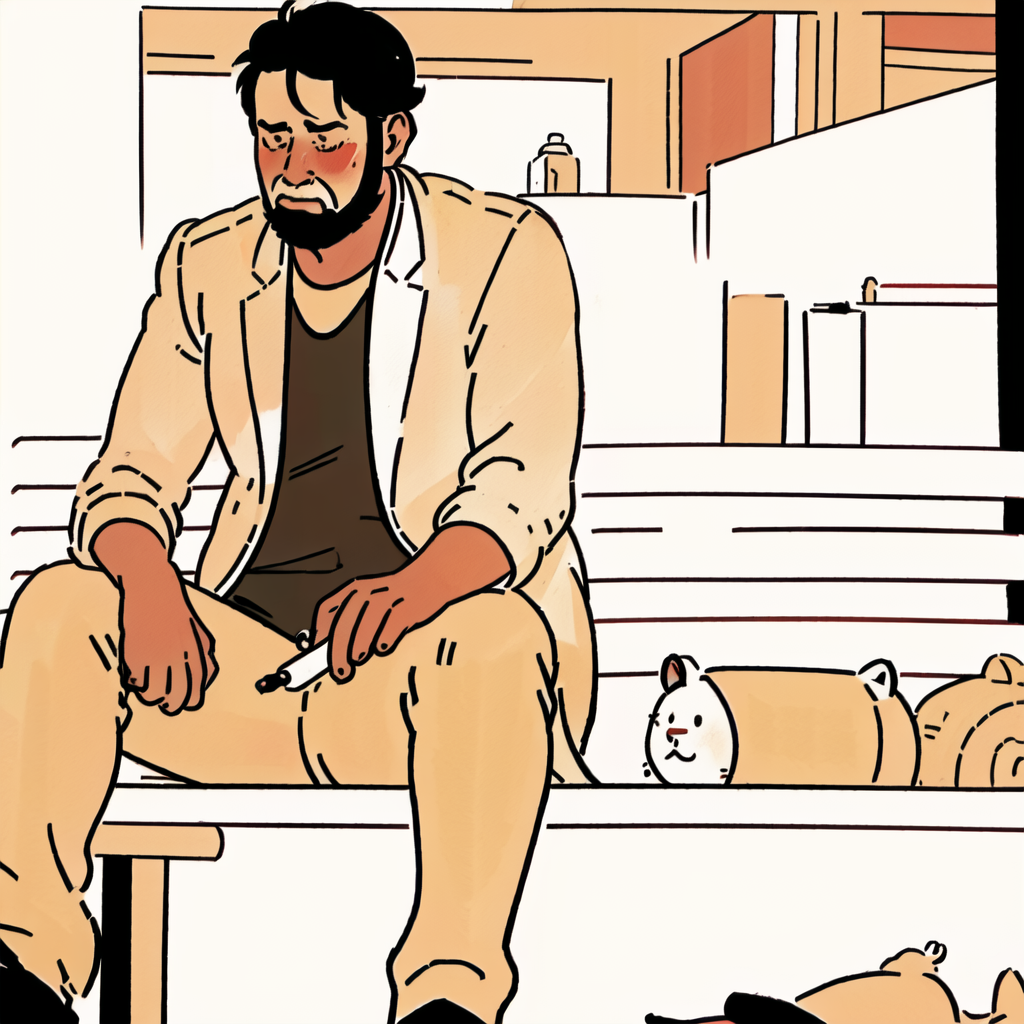

Example prompt: Sad Keanu in the style of minimalist line art

- Model: AOM3 + orangemix VAE
- Sampler: Euler a, 20 steps
- 3 ControlNets:
  - depth w/ original image
  - openpose_full w/ original image
  - t2iadapter_style w/ style image
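The setup above can be summarized as a small data structure. This is a minimal sketch, not the settings schema of any real UI or API: the class and field names (`ControlUnit`, `GenerationSettings`, `source`, `assign_inputs`) and the image file names are hypothetical, while the model, sampler, and preprocessor names are copied from the list. What it makes explicit is which input image feeds each unit: depth and openpose read the original image, and only the style adapter reads the style image.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class ControlUnit:
    """One ControlNet / T2I-Adapter unit (hypothetical structure, for illustration only)."""
    preprocessor: str  # preprocessor name as listed in the article
    source: str        # which input feeds this unit: "original" or "style"


@dataclass
class GenerationSettings:
    """Hypothetical bundle of the settings from the article's list."""
    model: str = "AOM3"
    vae: str = "orangemix"
    sampler: str = "Euler a"
    steps: int = 20
    units: List[ControlUnit] = field(default_factory=lambda: [
        ControlUnit("depth", "original"),          # conditions on the original's depth
        ControlUnit("openpose_full", "original"),  # conditions on the original's pose
        ControlUnit("t2iadapter_style", "style"),  # conditions on the style image
    ])

    def assign_inputs(self, original_image: str, style_image: str) -> Dict[str, str]:
        """Map each unit's preprocessor to the image path it should receive."""
        images = {"original": original_image, "style": style_image}
        return {unit.preprocessor: images[unit.source] for unit in self.units}


settings = GenerationSettings()
# Hypothetical file names for the source photo and the style reference.
inputs = settings.assign_inputs("keanu.png", "line_art.png")
for name, path in inputs.items():
    print(f"{name}: {path}")
```

Running it prints one line per unit, with only `t2iadapter_style` pointing at the style image.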

Prompt:

`[basic prompt (e.g. man in jacket sitting)] <lora:add_detail:-0.5> cartoon fanart style <lora:cartoon_fanart_style:0.5> Simple line <lora:20.50:0.5>`
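The prompt follows a simple pattern: a base description, then for each style an optional keyword phrase followed by a LoRA reference with a weight. The sketch below assumes the AUTOMATIC1111 WebUI, whose prompts reference a LoRA as `<lora:name:weight>`; the helper names `lora_tag` and `build_prompt` are hypothetical, and the LoRA names and weights are taken from the prompt above.

```python
from typing import List, Tuple


def lora_tag(name: str, weight: float) -> str:
    """Format a LoRA reference in the AUTOMATIC1111 prompt syntax <lora:name:weight>."""
    # :g drops trailing zeros, so 1.0 becomes "1" and -0.5 stays "-0.5".
    return f"<lora:{name}:{weight:g}>"


def build_prompt(base: str, styles: List[Tuple[str, str, float]]) -> str:
    """Append (keywords, lora_name, weight) entries to a base prompt.

    Keywords are placed before their LoRA tag, as in the article's prompt;
    an empty keyword string emits only the tag (e.g. for add_detail).
    """
    parts = [base]
    for keywords, lora_name, weight in styles:
        if keywords:
            parts.append(keywords)
        parts.append(lora_tag(lora_name, weight))
    return " ".join(parts)


prompt = build_prompt(
    "man in jacket sitting",
    [
        ("", "add_detail", -0.5),                               # negative weight passed through as written
        ("cartoon fanart style", "cartoon_fanart_style", 0.5),
        ("Simple line", "20.50", 0.5),
    ],
)
print(prompt)
# man in jacket sitting <lora:add_detail:-0.5> cartoon fanart style <lora:cartoon_fanart_style:0.5> Simple line <lora:20.50:0.5>
```

Keeping each style as a (keywords, LoRA, weight) tuple makes it easy to swap one style LoRA or adjust its weight without retyping the whole prompt.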

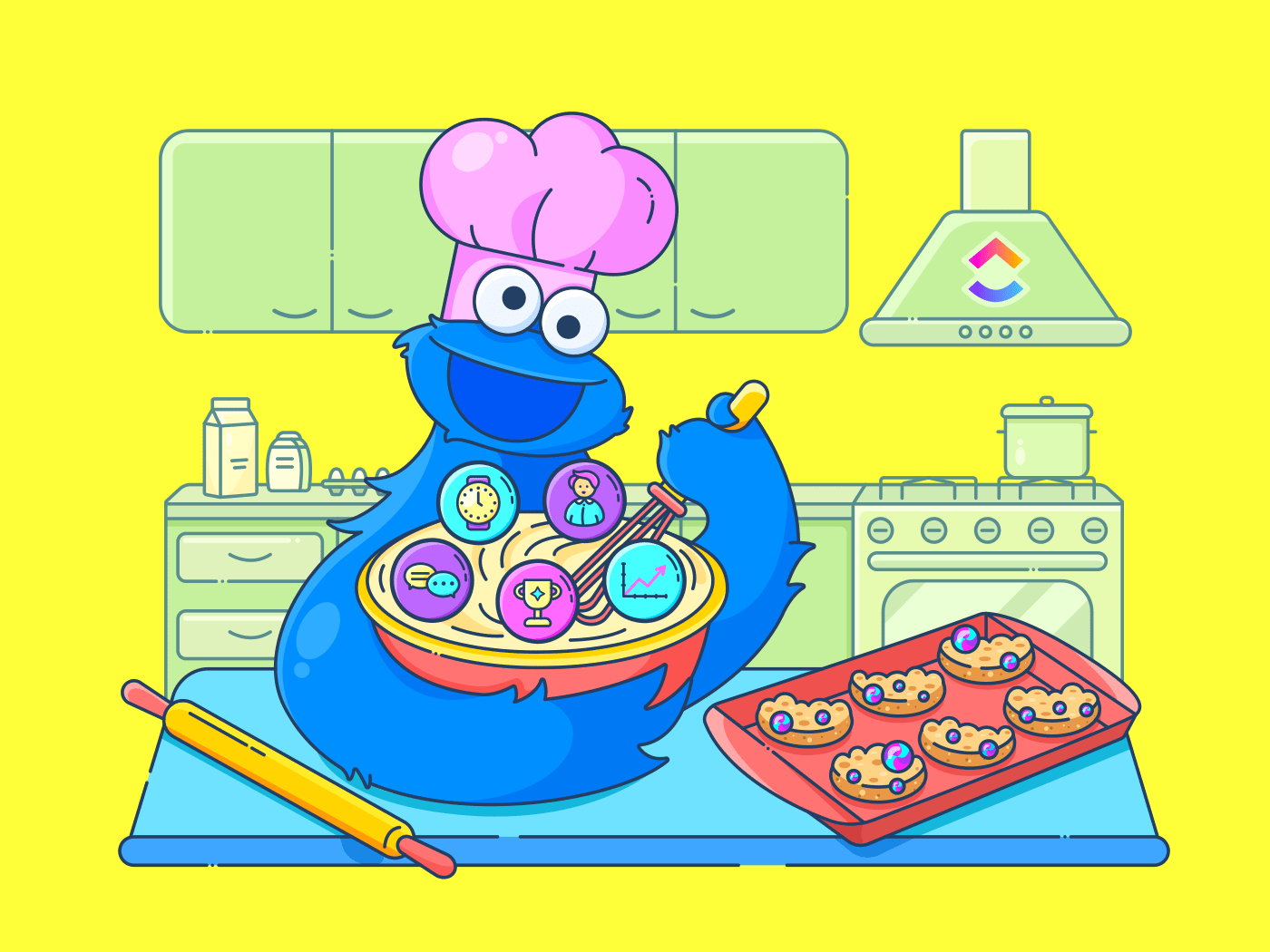

Notice that the style image affects both the color and the content of the scene.

| Style Image | Output |
|---|---|
| [none] |  |
|  |  |
|  |  |
|  |  |
|  |  |